Intel Experience Store Munich: How NPUs Are Redefining the PC

Between the Viktualienmarkt and the city’s golden autumn leaves, Intel packed the future into a pop-up store in Munich. From the outside, through the glass front, you don’t just see notebooks and desktops – you see workflows. Tasks that once required cloud services or expensive workstations now run natively on sleek laptops. The common denominator: NPU cores (Neural Processing Units), specialized accelerators for AI workloads built into Intel’s newest CPU generation.

In this article, we’ll walk through the store, the demos, and – more importantly – the real-world applications: Adobe Photoshop and Premiere Pro, native on-device image generation, local analysis of screen and input content for safety and compliance, and Elgato’s (Corsair) teleprompter that finally keeps pace with the speaker instead of forcing them to keep pace with it. Along the way, there are quotes from conversations, on-site impressions, and observations that perfectly capture the mood in Munich.

“It feels like a silent turbocharger in the laptop,” one visitor said, pointing to the NPU utilization graph next to a video preview. That’s the essence here: power you don’t hear, and whose cost barely shows up in battery life.

What Is an NPU – and Why Should You Care?

CPUs are versatile, GPUs are massively parallel – and NPUs are built for one thing only: the math that modern AI models rely on. Matrix multiplications, vector operations, quantized inference at low precision (INT8/INT4), and deterministic data flows. The benefits in practice are tangible:

- Efficiency: For the sustained, low-precision inference they are designed for, NPUs typically draw far less power than a CPU or GPU doing the same work. That means longer battery life and cooler devices.

- Consistency: NPUs deliver predictable latency – crucial for features that must react in real time (voice, gaze tracking, or adaptive media processing).

- Freedom: CPU and GPU remain available for everything else – timeline editing, effects, or 3D rendering – while the NPU handles inference.

At the store, live graphs display how workload is distributed across CPU, GPU, and NPU, making visible for the first time where the “magic” in your system actually happens.
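To make "quantized inference at low precision" a little more concrete, here is a minimal sketch of symmetric INT8 quantization applied to a dot product, the building block of a matrix multiplication. It is an illustration in plain Python, not how any particular NPU or runtime implements it; the function names and sample values are made up for this example.

```python
def quantize_int8(values):
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs else 1.0
    return [round(v / scale) for v in values], scale


def int8_dot(a, b):
    """Dot product accumulated on integers, rescaled to float once at the end."""
    qa, scale_a = quantize_int8(a)
    qb, scale_b = quantize_int8(b)
    acc = sum(x * y for x, y in zip(qa, qb))  # integer-only accumulation
    return acc * scale_a * scale_b


# Illustrative values only.
weights = [0.12, -0.83, 0.45, 0.07]
inputs = [1.50, 0.25, -0.60, 2.00]

exact = sum(w * x for w, x in zip(weights, inputs))
approx = int8_dot(weights, inputs)
print(f"float: {exact:.4f}  int8: {approx:.4f}  error: {abs(exact - approx):.4f}")
```

The point of the exercise: the expensive inner loop works on small integers, and the float scale factors are applied only once. The result is slightly off from the float version, which is the accuracy-for-efficiency trade that low-precision inference makes.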

Photoshop with NPU Acceleration: AI That Feels Like a Normal Tool

Adobe has been pushing hard in Photoshop over the past few years: generative fill, context-aware retouching, semantic selections. What surprised visitors in Munich wasn’t that these features exist – but how natural they feel when powered by an NPU.

Simplified Image Manipulation

Classic retouching tools – clone stamp, brush, masks – remain essential. But a “prompt brush” that accepts short text input like “add a soft, warm evening sky with light clouds” instantly creates a realistic backdrop that matches the lighting and perspective.

- Context awareness: The NPU accelerates object and edge detection. People, skies, fabrics, water surfaces – all are separated cleanly with a single click.

- Iterative workflow: Instead of “all or nothing,” you get variants. If one doesn’t fit, you generate another locally – no upload, no waiting for a server.

A creator on site said, “I used to choose between fast and good. Now fast is suddenly good enough – and great is just five iterations away.”

Why On-Device?

Two reasons: privacy and speed. Your images never leave your computer; client data stays internal. At the same time, the network round trip disappears, which matters most for small, repeated edits. And battery life? Still excellent, because NPUs perform inference at a low power draw.

Premiere Pro: Searching Video Clips by “Blue Jacket” or “Water”

The crowd favorite for video editors was a beta demo of Adobe Premiere Pro that semantically analyzes imported clips and makes them searchable – not just by spoken words, but by visual features like “blue jacket,” “water,” “dog on beach,” or “night scene with neon lights.”

How It Feels

You drag a folder of 200 clips into your project. In the background, the NPU performs on-device analysis – object detection, scene classification, even simple activity recognition. Then you type “blue jacket water” into a search bar, and Premiere shows thumbnail hits at the correct timeline positions.

- NPU advantage: Recognition runs efficiently, keeping the system cool and quiet.

- Workflow advantage: Instead of manually tagging and sorting, you jump straight into the creative assembly.
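How a search like this could work once the analysis has run can be sketched with a simple label index. This is not Adobe's implementation (the demo did not reveal one); the detection records, file names, and the assumption that the query is already split into labels are all hypothetical.

```python
from collections import defaultdict

# Hypothetical analysis output: (clip, timestamp in seconds, detected labels).
detections = [
    ("clip_001.mp4", 12.0, {"blue jacket", "street"}),
    ("clip_001.mp4", 48.5, {"blue jacket", "water"}),
    ("clip_007.mp4", 3.2, {"dog", "beach", "water"}),
]


def build_index(detections):
    """Map each label to the set of (clip, timestamp) segments where it appears."""
    index = defaultdict(set)
    for clip, timestamp, labels in detections:
        for label in labels:
            index[label].add((clip, timestamp))
    return index


def search(index, *labels):
    """Return segments that contain all requested labels, sorted by clip and time."""
    hits = [index.get(label, set()) for label in labels]
    return sorted(set.intersection(*hits)) if hits else []


index = build_index(detections)
print(search(index, "blue jacket", "water"))  # [('clip_001.mp4', 48.5)]
```

A production system would more likely compare text and image embeddings than exact labels, but the shape is the same: analyze once in the background, then answer queries from an index instead of rescanning footage.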

“My archive lives in my head – not in my timeline,” joked one editor. “If the machine sorts the first drawers for me, I find creative transitions faster.”

Native Image Generation: Prompts Without the Cloud

Another section showcased local diffusion and LLM demos. The idea: base models are quantized (e.g., INT8/INT4), optimized for the NPU, and then run directly on the notebook. Type “cinematic portrait in golden hour lighting, 85mm depth of field, soft focus”, wait a few seconds – done. No account, no upload, no server.
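Why quantization matters for running models on a notebook comes down to simple arithmetic: weight storage scales with bits per weight. The sketch below uses a hypothetical 1-billion-parameter model and ignores runtime overhead such as activations and caches.

```python
def weights_size_gb(num_params, bits_per_weight):
    """Approximate weight storage in gigabytes (10^9 bytes), ignoring overhead."""
    return num_params * bits_per_weight / 8 / 1e9


params = 1_000_000_000  # hypothetical 1B-parameter model
for name, bits in [("FP16", 16), ("INT8", 8), ("INT4", 4)]:
    print(f"{name}: {weights_size_gb(params, bits):.1f} GB")
# FP16: 2.0 GB
# INT8: 1.0 GB
# INT4: 0.5 GB
```

Halving the precision halves the memory the weights occupy and the data that has to move per inference step, which is a large part of why INT8 and INT4 variants are the ones that end up on laptops.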

Limits and Opportunities

- Quality: The absolute top-tier output of massive cloud models isn’t always reachable locally yet. But for mockups, concepts, and moodboards, it’s often more than enough.

- Speed: For ideation phases, 3–10 seconds per image is game-changing – especially without switching to a browser.

- Privacy & IP: Internal designs, confidential imagery, and proprietary data stay in-house – a major advantage in sensitive industries.

An Intel engineer summed it up neatly:

“Most companies don’t have a technical problem – they have a risk problem. On-device AI removes a lot of those question marks.”

Security: Local Analysis of Screen and Input (NSFW & Blocked Terms)

Another section addressed content safety – a dry but essential topic. Two scenarios stood out:

- On-Screen NSFW Detection: The NPU continuously classifies screen frames. If a threshold is exceeded, the system can lock, blur, or alert IT, depending on policy. This isn’t just for parental control, but also corporate compliance.

- Input Analysis: Text entries are locally scanned for sensitive or prohibited terms: personal data, internal project names, offensive language, or phishing triggers. Unlike static keyword lists, AI models can evaluate context – irony, quotations, or permissions (“may” vs. “may not”).
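The "threshold exceeded, then lock, blur, or alert" logic from the first scenario can be sketched in a few lines. The classifier itself is out of scope here; the sketch assumes it yields one score between 0 and 1 per frame. The threshold values, the action names, and the rolling average (added so a single odd frame does not lock the machine) are assumptions for illustration, not what the demo used.

```python
from collections import deque

# Hypothetical policy: minimum averaged score -> action, strictest first.
POLICY = [(0.9, "lock"), (0.7, "blur"), (0.5, "alert")]


class ScreenMonitor:
    """Turns per-frame classifier scores into policy actions."""

    def __init__(self, window=5):
        self.scores = deque(maxlen=window)  # keeps only the most recent frames

    def update(self, score):
        """Add one frame score in [0, 1]; return the triggered action or None."""
        self.scores.append(score)
        average = sum(self.scores) / len(self.scores)
        for threshold, action in POLICY:
            if average >= threshold:
                return action
        return None


monitor = ScreenMonitor(window=3)
for score in [0.1, 0.95, 0.9, 0.92]:
    print(score, monitor.update(score))
```

Keeping thresholds and actions in a small table rather than in code is what makes the "depending on policy" part practical: IT can change the table without touching the monitor.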

Why Local Makes Sense

- Privacy: Neither screen content nor keystrokes leave the machine.

- Response time: Protection happens instantly, without cloud latency.

- Customizability: Companies can set their own rules, even by department or sensitivity level.
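Per-department rules are easy to picture with the static keyword baseline that context-aware models are meant to improve on. The sketch below is only that baseline: the terms, the department name, and the project name are invented, and it cannot tell a quotation from a leak the way a model could.

```python
import re

# Hypothetical rule sets; a real deployment would load these from managed policy.
DEFAULT_TERMS = {"password", "iban"}
DEPARTMENT_TERMS = {"engineering": {"project falcon"}}


def find_blocked_terms(text, department=None):
    """Return blocked terms that occur in text as whole words, ignoring case."""
    terms = DEFAULT_TERMS | DEPARTMENT_TERMS.get(department, set())
    found = []
    for term in sorted(terms):
        pattern = rf"\b{re.escape(term)}\b"
        if re.search(pattern, text, flags=re.IGNORECASE):
            found.append(term)
    return found


print(find_blocked_terms("Status of Project Falcon?", department="engineering"))
print(find_blocked_terms("Status of Project Falcon?"))
```

The same sentence is flagged for one department and passes for everyone else, which is the kind of granularity the customizability point refers to.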

Of course, this raises questions about transparency and oversight. The key is ensuring policy and communication go hand in hand. From a technical perspective, however, local analysis is the most data-sparing option, since screen content and keystrokes never have to leave the device.

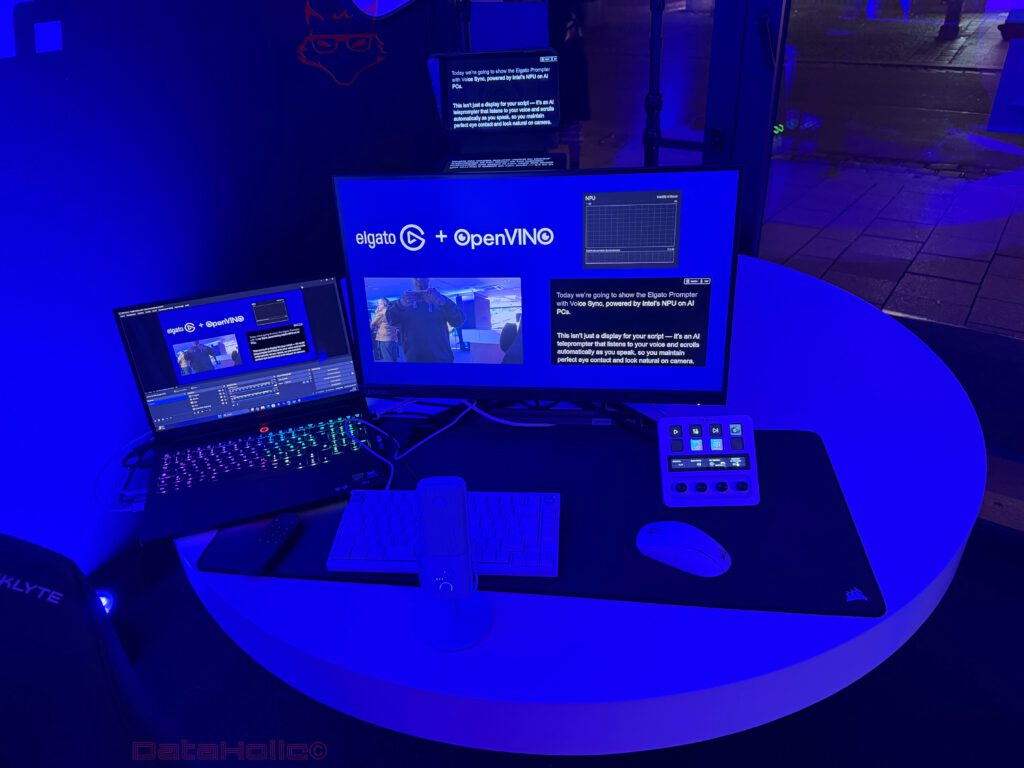

Elgato Prompter + OpenVINO: A Teleprompter That Listens

A major crowd magnet was Elgato’s (Corsair) corner: a teleprompter that isn’t a rigid screen dictating pace, but an active listener. The demo showed “Elgato + OpenVINO” on screen, alongside NPU utilization graphs. The innovation:

- The system listens to the speaker in real time (on-device speech recognition).

- It scrolls the script at the speaker’s pace.

- Pauses? Ad-libs? The text waits.

- Speak faster? The text keeps up.

In the past, the rule was: The person adapts to the prompter. Now it’s: The prompter adapts to the person.

The implications are significant:

- Natural delivery: Speakers maintain eye contact with the camera instead of scanning for words.

- Error tolerance: Slip-ups no longer derail the text flow.

- Solo production: Single creators can record professional takes without an operator.

As one creator put it: “Finally, I can talk like a human – not like a metronome.”

Technically, the system relies on OpenVINO optimizations and NPU-accelerated inference for ASR (Automatic Speech Recognition) and tempo tracking. CPU and GPU remain free for preview, color grading, capture, or streaming.
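The "text waits, text keeps up" behavior can be approximated with a small matching loop: recognized words advance a cursor through the script only when they match something within a short look-ahead window. This is a guess at the general idea, not Elgato's algorithm; the class name, the window size, and the sample script are hypothetical, and the speech recognizer is assumed to deliver plain text.

```python
import re


def normalize(text):
    """Lowercase and split into words, dropping punctuation."""
    return re.findall(r"[a-z0-9']+", text.lower())


class ScriptFollower:
    """Tracks how far into a script the speaker has come."""

    def __init__(self, script, lookahead=4):
        self.words = normalize(script)
        self.position = 0  # index of the next script word we expect to hear
        self.lookahead = lookahead

    def hear(self, spoken):
        """Advance past script words matched by recognized speech; ignore the rest."""
        for word in normalize(spoken):
            window = self.words[self.position:self.position + self.lookahead]
            if word in window:
                self.position += window.index(word) + 1
        return self.position


follower = ScriptFollower("Welcome to the show. Today we look at NPUs in laptops.")
print(follower.hear("welcome to the show"))          # 4
print(follower.hear("um, by the way, great crowd"))  # 4 (ad-lib, the text waits)
print(follower.hear("today we look at"))             # 8
```

The returned position would drive the scroll offset. One known weakness of this naive version: an ad-lib that happens to contain a word from the look-ahead window nudges the cursor forward, so a real tracker would need something more robust than exact word matching.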

Real-World Impact: What NPUs Change Day to Day

1) Battery Life & Noise

Perhaps the most noticeable improvement: creative and office workflows involving AI now run quieter. The fan stays off more often because NPUs deliver much more inference per watt. On the go, that means longer unplugged sessions for AI-powered tasks.

2) “Background AI”

Because the NPU is dedicated, AI can finally run in the background without choking the system. Examples:

- Real-time noise suppression and speaker separation during calls while you design in Figma.

- Screen OCR and semantic search running silently while you research.

- Automatic B-roll suggestions in video editing – without stuttering playback.

3) Privacy-Friendly Workflows

Especially in Europe, this is key. Because the data stays on the device, on-device AI is often easier to justify under data protection rules than cloud-based processing. That lowers the barrier to adoption in SMBs and the public sector.

4) New Skill Sets

To get the most out of it, users should learn two things:

- Prompt precision (style, lighting, lens, tone).

- Model selection – choosing fine-tuned or fast variants depending on the task.

A memorable workshop line: “Don’t click more – describe better.”

Walking Through the Store (Impressions)

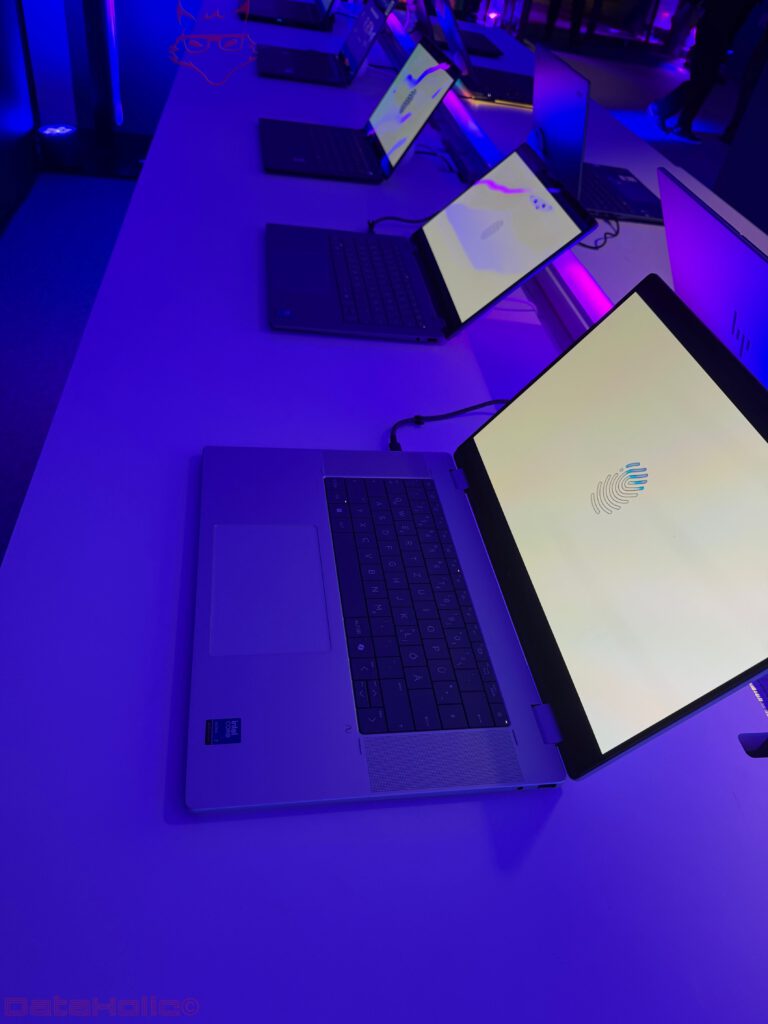

- Laptop Row: A line of current notebooks – from ultra-thin 2-in-1s to creator-focused models. Many show small overlays tracking CPU, GPU, and NPU utilization, making the architecture visually tangible.

- Creator Island: Monitor, camera, mic, stream deck, teleprompter. It becomes obvious that NPUs aren’t a gimmick; they simplify setups. Fewer boxes, less noise, more control.

- Gaming Area: Yes, even here AI is creeping in – from upscaling to automatic highlight detection. Gaming still belongs to the GPU, but peripheral AI (analysis, assistance, automation) is joining the mix.

The store is designed for hands-on exploration. Visitors can type their own prompts, tweak settings, and see differences firsthand. It’s the only way to truly sense the strengths and limits of this technology.

Opportunities, Limits, and What Comes Next

- Opportunities

  - Democratization: Features once reserved for studios now reach everyday devices.

  - Safety: On-device NSFW and input analysis balance security and privacy.

  - Creativity: Faster iteration fundamentally changes how ideas emerge.

- Limits

  - Model size: Some cutting-edge models are still too large or slow for local use.

  - Quality variance: Not every local generation matches the fidelity of cloud systems.

  - Ecosystem dependency: Full benefits rely on developers integrating NPU acceleration effectively – the ball is partly in Adobe’s court.

- Next Steps

  - Hybrid thinking: Local inference for daily tasks, cloud for specialized needs.

  - Clear policies: Screen and input analysis must be transparent.

  - Team training: Prompting, presets, and model choice – small skills, huge impact.
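"Hybrid thinking" can be written down as a routing rule. The sketch below is one possible policy under stated assumptions: tasks carry an approximate model size and a confidentiality flag, the 8 GB local budget is an arbitrary placeholder, and confidential work that does not fit locally is refused rather than sent out.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    model_size_gb: float  # approximate size of the model the task needs
    confidential: bool    # must the data stay on the device?


def route(task, local_budget_gb=8.0):
    """Return 'local', 'cloud', or 'blocked' for a task under a simple policy."""
    if task.model_size_gb <= local_budget_gb:
        return "local"
    return "blocked" if task.confidential else "cloud"


for task in [
    Task("moodboard image", 2.0, confidential=False),
    Task("large-model rewrite", 40.0, confidential=False),
    Task("contract summary", 40.0, confidential=True),
]:
    print(f"{task.name}: {route(task)}")
```

The third case is the interesting one: a clear policy says what happens when the local model is not enough and the data may not leave, instead of leaving that decision to whoever is at the keyboard.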

Mini How-To: Getting Started with NPU Workflows

- Update drivers & runtimes (OpenVINO, DirectML, ONNX Runtime, etc.).

- Check app versions for NPU support (Photoshop/Premiere “AI on device” settings).

- Install local models (quantized variants for vision or speech).

- Build prompt libraries:

  - Style words (cinematic, soft light, volumetric).

  - Content words (objects, colors, mood).

  - Constraints (realistic, no text overlays, neutral lighting).

- Optional policies: Define blocked terms, NSFW thresholds, and logging rules.
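For the first step, a quick way to check whether a runtime actually sees the NPU is to ask it. The sketch below uses OpenVINO's `Core().available_devices` if the `openvino` package is installed and falls back to an empty list otherwise; the preference order and the helper names are assumptions for this example.

```python
def pick_device(available, preference=("NPU", "GPU", "CPU")):
    """Return the first preferred device present in `available`, or None."""
    for wanted in preference:
        for name in available:
            # OpenVINO may report indexed names such as "GPU.0".
            if name == wanted or name.startswith(wanted + "."):
                return name
    return None


def detect_devices():
    """List OpenVINO devices, or an empty list if OpenVINO is not installed."""
    try:
        import openvino as ov
    except ImportError:
        return []
    return list(ov.Core().available_devices)


print(pick_device(detect_devices()) or "no OpenVINO device found")
```

If "NPU" does not show up on a machine that should have one, outdated drivers are the usual first suspect, which is why the driver update comes first in the list.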

Pro tip: Treat your prompt library like a brush kit in Photoshop – short, precise, reusable elements instead of long paragraphs.
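A prompt library in that spirit can be as small as a dictionary of named fragments that get combined per image. The keys and fragments below are examples, not a recommended vocabulary.

```python
# Hypothetical library: short keys map to reusable prompt fragments.
PROMPT_LIBRARY = {
    "style": {
        "cinematic": "cinematic",
        "soft": "soft light",
        "volumetric": "volumetric lighting",
    },
    "constraints": {
        "real": "realistic",
        "clean": "no text overlays",
        "neutral": "neutral lighting",
    },
}


def build_prompt(subject, styles=(), constraints=()):
    """Join a subject with selected style and constraint fragments."""
    parts = [subject]
    parts += [PROMPT_LIBRARY["style"][key] for key in styles]
    parts += [PROMPT_LIBRARY["constraints"][key] for key in constraints]
    return ", ".join(parts)


print(build_prompt("portrait at golden hour", styles=["cinematic", "soft"], constraints=["clean"]))
# portrait at golden hour, cinematic, soft light, no text overlays
```

An unknown key raises a `KeyError`, which is deliberate here: a typo in a shared library should fail loudly instead of silently producing a different image.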

Conclusion: A Quiet Revolution with Big Impact

The Intel Pop-Up Store in Munich didn’t showcase flashy tech demos – it showcased effortless everyday computing. The NPU cores are the missing cog that finally brings AI features where they belong: inside the tools we already use.

- In Photoshop, generative functions become just another normal action – fast enough to experiment.

- In Premiere Pro, video archives become semantically searchable – a game-changer for large projects.

- Image generation happens natively, ideal for mockups, prototypes, or confidential work.

- Safety features like NSFW screening and input filtering run locally – ensuring privacy.

- And Elgato’s teleprompter demonstrates how “real-time + NPU” can make tech more human: the text follows your voice, not the other way around.

Perhaps the best summary came from one of two visitors as they left the store:

“AI used to feel like something I had to go to. Now it’s just there, in my tools, quietly helping.”

That perfectly describes Munich’s mood: no fireworks, just solid engineering in silicon that smooths the rough edges of everyday work for creatives, students, IT pros, and enterprises alike. If this trajectory continues, 2026 won’t be defined by new buzzwords – but by quieter fans, longer battery life, and workflows that flow.